ChatGPT: Not Just for College Research Papers

ChatGPT has been in the news a lot recently, mostly for its alleged sentience (it’s not) and for the debate over whether (or when) students using it counts as cheating.

(Image credit: Shutterstock / PopTika)

But generative AI is making its way into industry, helping with training, scenario testing, and even product development. The team at Kent is using AI for facility security and safety.

On his Substack, David Rogers has shared real scenario-based “conversations” he’s had with ChatGPT to walk through manufacturing situations.

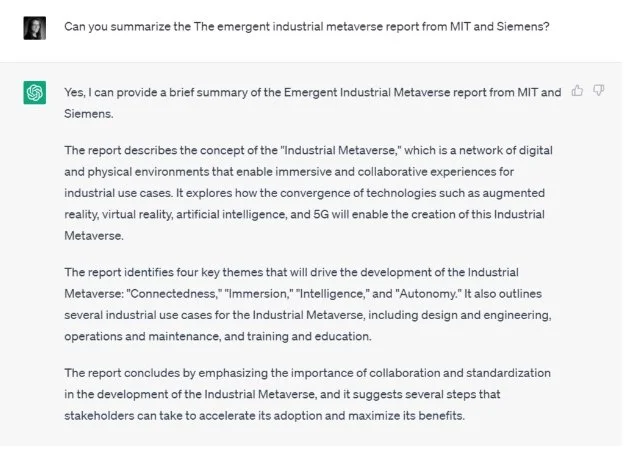

How real and useful these conversations are is TBD. For example, when I asked ChatGPT to write a summary of key findings from a recent industry report, it straight up made up findings and numbers.

There’s no survey data in the report and yet ChatGPT manifested these numbers:

In this similar example, ChatGPT made up key findings for the report. The word “connectedness” is nowhere in the entire document.

On LinkedIn, David J. Thul experienced similar results. The basics were decent, but deeper dives were blatantly wrong. He gives tips on how oil and gas companies can (and shouldn’t) use ChatGPT as it exists now.

All to say: Generative AI isn’t ready to replace humans. We still need people to interpret, verify, and apply the outputs from ChatGPT. And it has a lot of learning left to do.

But artificial intelligence (AI) in general, not just generative AI, is having a big impact on the oil and gas industry and prompting a lot of conversations about best practices, skill transfer, and more.

AI is in use, and it’s likely not going away anytime soon. The key is using it responsibly and for the right applications.